```csharp
switch (input)
{
    case "AAAA":
        Console.WriteLine("AAAA branch");
        break;
    case "BBBB":
        Console.WriteLine("BBBB branch");
        break;
    default:
        Console.WriteLine("default branch");
        break;
}
Console.WriteLine("Complete");
```

When you look at the IL (intermediate language) that it compiles into, it is essentially the same as a bunch of if and else if statements. Converted back into C# code, it is as if you wrote this:

```csharp
if (input == "AAAA")
{
    Console.WriteLine("AAAA branch");
}
else if (input == "BBBB")
{
    Console.WriteLine("BBBB branch");
}
else
{
    Console.WriteLine("default branch");
}
Console.WriteLine("Complete");
```

However, if you keep adding case statements, this becomes inefficient: every additional case adds another string comparison, and string comparisons are relatively expensive. At a certain point, as you add cases, the compiler switches to an entirely different technique. It computes a hash of the input string and switches on that hash value. Converted back into C# code, the IL looks like this (assume there are more case statements than shown):

```csharp
string s = input;
switch (ComputeStringHash(s))
{
    case 0x25bfaac5:
        if (s == "BBBB")
        {
            Console.WriteLine("BBBB branch");
            goto Label_0186;
        }
        break;
    case 0xff323f9:
        if (s == "AAAA")
        {
            Console.WriteLine("AAAA branch");
            goto Label_0186;
        }
        break;
}
Console.WriteLine("default branch");
Label_0186:
Console.WriteLine("Complete");
```

The ComputeStringHash method is a pretty simple hash function that looks like this:

```csharp
internal static uint ComputeStringHash(string s)
{
    uint num = 0;
    if (s != null)
    {
        num = 0x811c9dc5; // FNV-1a 32-bit offset basis
        for (int i = 0; i < s.Length; i++)
        {
            // XOR in the next UTF-16 char, then multiply by the FNV 32-bit prime
            num = unchecked((s[i] ^ num) * 0x1000193);
        }
    }
    return num;
}
```

This is a version of the 32-bit FNV-1a hashing algorithm.
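
To see what "a version of" means here: textbook 32-bit FNV-1a starts from the offset basis 0x811c9dc5 and, for each byte of input, XORs the byte in and multiplies by the prime 0x1000193. The compiler's helper does the same thing once per UTF-16 char instead of once per byte. The sketch below compares the two. The class and method names are made up for illustration, and encoding the string as UTF-8 for the byte-wise version is an assumption of this sketch. For pure-ASCII strings the two results are identical, because each char becomes a single UTF-8 byte with the same value; for other characters they generally differ.

```csharp
using System;
using System.Text;

// Hypothetical helper class for illustration; not part of any library.
public static class FnvVariants
{
    private const uint OffsetBasis = 0x811C9DC5; // 2166136261
    private const uint Prime = 0x01000193;       // 16777619

    // Mirrors the compiler-generated helper: one FNV-1a step per UTF-16 char.
    public static uint CharWiseFnv1a(string s)
    {
        if (s == null) return 0;
        uint hash = OffsetBasis;
        foreach (char c in s)
        {
            hash = unchecked((c ^ hash) * Prime);
        }
        return hash;
    }

    // Textbook FNV-1a: one step per byte (here, the bytes of the UTF-8 encoding).
    public static uint ByteWiseFnv1a(byte[] data)
    {
        uint hash = OffsetBasis;
        foreach (byte b in data)
        {
            hash = unchecked((b ^ hash) * Prime);
        }
        return hash;
    }

    public static void Main()
    {
        foreach (string s in new[] { "AAAA", "BBBB", "caf\u00e9" })
        {
            uint charWise = CharWiseFnv1a(s);
            uint byteWise = ByteWiseFnv1a(Encoding.UTF8.GetBytes(s));
            Console.WriteLine($"{s}: char-wise 0x{charWise:x8}, UTF-8 byte-wise 0x{byteWise:x8}");
        }
    }
}
```

The empty string hashes to the offset basis itself, and "a" hashes to 0xe40c292c, the published FNV-1a test vector for that input.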

The change to using hashing seems to occur at about eight string case statements. The advantage is that there will typically be just one string comparison (none at all if the input's hash matches no case); all of the other comparisons are between uint values. Computing the hash has some overhead, which is why the compiler doesn't use this technique for a small number of case statements.
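
The trade-off can be made concrete by counting string comparisons. This sketch uses ten made-up labels and models a linear if/else if chain against hash-first dispatch. It is a simplified model, not what the compiler emits: the hashed path scans an array of precomputed uint hashes instead of switching on them, and SwitchCostDemo, Linear and Hashed are hypothetical names.

```csharp
using System;

// Hypothetical demo class; the ten labels stand in for case labels.
public static class SwitchCostDemo
{
    static readonly string[] Labels =
        { "AAAA", "BBBB", "CCCC", "DDDD", "EEEE", "FFFF", "GGGG", "HHHH", "IIII", "JJJJ" };

    // Precomputed hash of every label (the compiler bakes these in as constants).
    static readonly uint[] LabelHashes = Array.ConvertAll(Labels, s => ComputeStringHash(s));

    // Same FNV-1a variant as the compiler-generated ComputeStringHash.
    static uint ComputeStringHash(string s)
    {
        uint num = 0;
        if (s != null)
        {
            num = 0x811C9DC5;
            foreach (char c in s) num = unchecked((c ^ num) * 0x01000193);
        }
        return num;
    }

    // Models an if/else-if chain: one string comparison per label, in order.
    public static (int Index, int StringCompares) Linear(string input)
    {
        int compares = 0;
        for (int i = 0; i < Labels.Length; i++)
        {
            compares++;
            if (input == Labels[i]) return (i, compares);
        }
        return (-1, compares);
    }

    // Models hash-first dispatch: uint comparisons select candidates, and a
    // string comparison happens only for labels whose hash equals the input's.
    public static (int Index, int StringCompares) Hashed(string input)
    {
        uint h = ComputeStringHash(input);
        int compares = 0;
        for (int i = 0; i < LabelHashes.Length; i++)
        {
            if (LabelHashes[i] != h) continue;
            compares++;
            if (input == Labels[i]) return (i, compares);
        }
        return (-1, compares);
    }

    public static void Main()
    {
        foreach (string input in new[] { "AAAA", "JJJJ", "ZZZZ" })
        {
            Console.WriteLine($"{input}: linear {Linear(input)}, hashed {Hashed(input)}");
        }
    }
}
```

For "JJJJ", the last label, the chain makes ten string comparisons, while the hashed path normally makes one; an input whose hash matches no label makes none.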

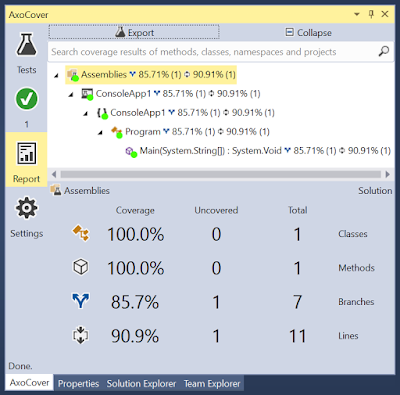

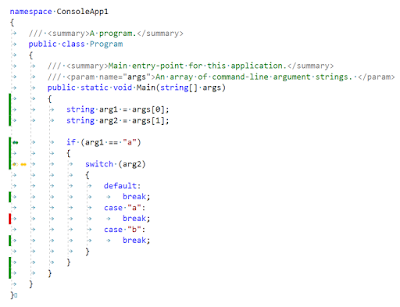

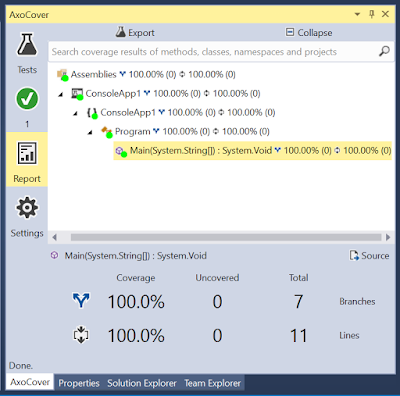

This actually becomes important when you are trying to write unit tests for the code. If you are trying to cover all of the branches in your unit tests, you will need a test input that hashes to 0xff323f9 but is not "AAAA" (and likewise for every other case), so that the false outcome of if (s == "AAAA"), the path that skips goto Label_0186 and falls through to the default branch, gets covered. You are unlikely to find a string other than "AAAA" with the same hash unless you are deliberately searching for a hash collision. This means that your code coverage will show branches as not covered, even though you test every case in the switch statement. Your branch coverage statistics will look like a failure (often only around 60% covered), even though your unit tests are actually adequate.
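
One way to get such an input is to construct the collision directly rather than search blindly. FNV-1a is easy to work backwards through: the prime is odd, so multiplication by it can be undone modulo 2^32, which means that for any prefix you can solve for the final character that lands on the target hash, as long as the solution fits in 16 bits. The sketch below tries four-letter prefixes until that happens. It is a hypothetical helper (CollisionFinder and its methods are not from any library), and it assumes your compiler emits the ComputeStringHash lowering shown above; check your own IL, because other compiler versions may lower string switches differently. The last character of the result may be a control character, a lone surrogate, or some other unusual UTF-16 code unit, which is fine for a test input.

```csharp
using System;

// Hypothetical test helper (not part of any library): finds a string that is
// not `target` but has the same ComputeStringHash value as `target`.
public static class CollisionFinder
{
    const uint OffsetBasis = 0x811C9DC5;
    const uint Prime = 0x01000193;
    const string Alphabet = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz";

    // Same FNV-1a variant as the compiler-generated ComputeStringHash.
    public static uint ComputeStringHash(string s)
    {
        uint num = 0;
        if (s != null)
        {
            num = OffsetBasis;
            foreach (char c in s) num = unchecked((c ^ num) * Prime);
        }
        return num;
    }

    // One FNV-1a step.
    static uint Step(uint hash, char c) => unchecked((c ^ hash) * Prime);

    // Prime is odd, so it is invertible modulo 2^32. Newton's iteration
    // x = x * (2 - Prime * x) doubles the number of correct low bits each time,
    // starting from 3 correct bits (an odd number squared is 1 mod 8).
    public static uint PrimeInverse()
    {
        uint inv = Prime;
        for (int i = 0; i < 5; i++) inv = unchecked(inv * (2u - Prime * inv));
        return inv;
    }

    // For each four-letter prefix with hash h, the final char must satisfy
    // (last ^ h) * Prime == targetHash, i.e. last == h ^ (targetHash * inverse),
    // which only works when that value fits in 16 bits.
    public static string Find(string target)
    {
        uint targetHash = ComputeStringHash(target);
        uint needed = unchecked(targetHash * PrimeInverse());
        foreach (char a in Alphabet)
        {
            uint h1 = Step(OffsetBasis, a);
            foreach (char b in Alphabet)
            {
                uint h2 = Step(h1, b);
                foreach (char c in Alphabet)
                {
                    uint h3 = Step(h2, c);
                    foreach (char d in Alphabet)
                    {
                        uint last = Step(h3, d) ^ needed;
                        if (last > 0xFFFF) continue;
                        string candidate = new string(new[] { a, b, c, d, (char)last });
                        if (candidate != target) return candidate;
                    }
                }
            }
        }
        return null; // no collision found with this alphabet and length
    }

    public static void Main()
    {
        string collision = Find("AAAA");
        if (collision == null) { Console.WriteLine("no collision found"); return; }
        Console.WriteLine($"prefix \"{collision.Substring(0, 4)}\" + U+{(int)collision[4]:X4}, " +
                          $"hash 0x{ComputeStringHash(collision):x8} vs 0x{ComputeStringHash("AAAA"):x8}");
    }
}
```

A test can then pass the returned string to the method under test and assert that the default branch runs.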

I have been working with the AxoCover and OpenCover developers to make the branch coverage statistics meaningful, but there may be no way to handle this correctly.

Addendum: When the compiler optimizes the switch statement, the logic is slightly more complicated than what is presented above. Before it gets to the string comparison, the C# compiler actually performs a binary search on the hash values rather than just checking them one after another. Adding tests that produce hash collisions will raise your coverage to more than 90%, but will still not go through all of the code for the binary search.
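
The binary search can be modelled in a few lines: sort the case labels by hash, look up the input's hash with Array.BinarySearch, then confirm with a string comparison. This is a hypothetical sketch (BinarySearchDispatch and Dispatch are made-up names). The compiler does not call Array.BinarySearch; as the addendum describes, it performs the search itself in the generated code, as comparisons against constant hash values, and each of those comparisons has its own true and false outcome in a branch-coverage report.

```csharp
using System;

// Hypothetical model of hash-then-binary-search dispatch; names are illustrative.
public static class BinarySearchDispatch
{
    static readonly string[] Labels =
        { "AAAA", "BBBB", "CCCC", "DDDD", "EEEE", "FFFF", "GGGG", "HHHH" };

    static readonly uint[] SortedHashes;
    static readonly string[] LabelsByHash;

    static BinarySearchDispatch()
    {
        SortedHashes = Array.ConvertAll(Labels, s => ComputeStringHash(s));
        LabelsByHash = (string[])Labels.Clone();
        Array.Sort(SortedHashes, LabelsByHash); // sort labels by hash, keeping pairs aligned
    }

    // Same FNV-1a variant as the compiler-generated ComputeStringHash.
    static uint ComputeStringHash(string s)
    {
        uint num = 0;
        if (s != null)
        {
            num = 0x811C9DC5;
            foreach (char c in s) num = unchecked((c ^ num) * 0x01000193);
        }
        return num;
    }

    // Returns the matching label, or null where the switch would take the default branch.
    public static string Dispatch(string input)
    {
        uint h = ComputeStringHash(input);
        int i = Array.BinarySearch(SortedHashes, h);
        if (i < 0) return null; // no case label has this hash

        // Step back to the first label with this hash, then compare strings
        // for every label sharing it (normally just one).
        while (i > 0 && SortedHashes[i - 1] == h) i--;
        for (; i < SortedHashes.Length && SortedHashes[i] == h; i++)
        {
            if (input == LabelsByHash[i]) return LabelsByHash[i];
        }
        return null; // hash matched but string did not: the hard-to-cover branch
    }

    public static void Main()
    {
        foreach (string input in new[] { "CCCC", "HHHH", "ZZZZ" })
        {
            string result = Dispatch(input) ?? "default branch";
            Console.WriteLine($"{input} -> {result}");
        }
    }
}
```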